Introduction

With the release of Alfresco Content Services 6.0, Alfresco moved from the traditional installer-based deployment to a containerised Docker deployment. Alfresco have been putting a lot of time into updating the Digital Business Platform to support a microservice architecture. This allows the system to scale out the parts that are under heavy load while scaling down the parts under light load. For example, a system that performs a lot of document transformations could run multiple containers dedicated to PDF transformations. Previously this would have required multiple content servers, as all ACS services were provided on a per Content Server basis. Using containers to support individual services is preferable to one Content Server providing everything. Therefore, the new architecture should mean reduced cost in both infrastructure and software licenses.

In November last year, Alfresco announced reference Helm charts specifically tailored for Amazon’s Elastic Container Service for Kubernetes (EKS), so we thought it would be apt to share our experience of working with ACS and AWS EKS. We are going to run an ACS and AWS EKS blog series, which will mainly cover the following:

- Alfresco Content Services Helm charts

- Setting up an EKS cluster in AWS

- Deploying Alfresco Content Services within an AWS EKS cluster

- Deploying ACS on AWS EKS using RDS and S3

- Autoscaling ACS on AWS EKS

Alfresco Content Services Helm Deployment Overview

The out-of-the-box Helm charts are written specifically for deploying Alfresco within AWS. The charts deploy the following pods by default:

- Alfresco Repo App – Deployed as a two-node Alfresco cluster (2 pods)

- Alfresco Share App – Deployed as a single pod

- Alfresco Digital Workspace – New ADF-based front end, deployed as a dependency requirement (that is, there are no deployment charts for Alfresco DW in the Helm charts)

- ActiveMQ – Deployed as part of the Alfresco Infrastructure dependency requirement. In this setup it is primarily used by the transform service, but it also serves message queuing in general.

- Transform Router – Deployed as a single pod; acts as the glue between ActiveMQ and the various transform services (e.g. LibreOffice, Tika, etc.)

- Transform Service Pods (2 pods for each) – LibreOffice, Tika, ImageMagick, Alfresco PDF Renderer

- Shared File Store – Provides the persistent volume for the transform service (the mount path is /tmp/Alfresco on the Shared File Store pod, with the actual content on the EFS volume)

- Alfresco Search Services – Single pod deployed as a dependency requirement, without the Insight Engine. Its persistent volume is defined and claimed on the worker node it is deployed on.

- PostgreSQL – Single pod deployed as a dependency requirement

Note

The Alfresco Infrastructure Helm charts are primarily used to provide the PersistentVolume/PersistentVolumeClaim and ActiveMQ. The other components (Alfresco Identity Service, Alfresco Event Gateway) are disabled.

AWS EKS Cluster

Amazon EKS is the AWS managed Kubernetes service, which lets you create and manage a Kubernetes cluster without having to install and run your own Kubernetes control plane. EKS runs the Kubernetes management infrastructure for you across multiple AWS availability zones to eliminate a single point of failure. Since we are going to use an AWS CloudFormation (CFN) template to create our EKS cluster stack, let’s go through a few prerequisites before starting the EKS cluster setup.

Pre-requisites

- Admin access to AWS resources

While not ideal, we found that having full admin access is the easiest way to get going: so many services are involved in bringing up the EKS cluster that setting the right permissions and policies individually can be tedious.

- Existing VPC

We need an existing VPC which has 3 public and 3 private subnets. This requirement exists purely because the default AWS EKS template expects it; you can always modify the default template to suit your needs. Take note of the VPC ID and the private/public subnet IDs, as they will be required during the stack creation.

- Elastic IP

At least one Elastic IP address should be available for the AWS account being used. By default only 5 EIPs are allowed per AWS account, and you need to make a request to AWS if you require more.

- Public/private key pair

An existing public/private key pair, which allows you to securely connect to your EC2 instance after it launches.

- AWS cert

An AWS certificate ARN is required when setting up the nginx-ingress controller, since it uses a classic AWS load balancer (ELB) to redirect traffic on the secure port 443.
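The prerequisites above can be sanity-checked before opening the CloudFormation console. The following is a minimal sketch rather than anything shipped with the AWS template: the function names and sample IDs are hypothetical, and the checks only validate the shape of the values (three subnets per tier, an ACM-style certificate ARN), not that the resources actually exist.

```bash
# Hypothetical pre-flight helpers; names and sample values are illustrative only.

# Succeeds when a comma-separated list holds exactly three subnet IDs,
# matching the 3 public / 3 private layout the default template expects.
has_three_subnets() {
  printf '%s\n' "$1" | awk -F',' '
    NF != 3 { exit 1 }
    { for (i = 1; i <= NF; i++) if ($i !~ /^subnet-[0-9a-f]+$/) exit 1 }
  '
}

# Succeeds when the value looks like an ACM certificate ARN.
is_acm_cert_arn() {
  printf '%s\n' "$1" | grep -Eq '^arn:aws:acm:[a-z0-9-]+:[0-9]{12}:certificate/.+$'
}

# Example with made-up IDs:
if has_three_subnets "subnet-0a1,subnet-0b2,subnet-0c3"; then
  echo "private subnets: OK"
fi

# Elastic IPs already allocated in the current region (needs AWS CLI credentials):
#   aws ec2 describe-addresses --query 'length(Addresses)'
```

Keeping the checks as small functions makes it easy to run them against both the public and the private subnet list before the values are pasted into the stack parameters.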

AWS EKS Cluster Set up

Once we have taken care of all the pre-requisites, we can move on to the next step and download the template that we are going to use to create our EKS Cluster.

The template can be downloaded from the following URL:

https://github.com/aws-quickstart/quickstart-amazon-eks/blob/master/templates/amazon-eks-master-existing-vpc.template.yaml

Once we have the template, we need to log in to the AWS Console and go to CloudFormation. Then click Create stack, upload the template and follow the on-screen instructions.

Note

AWS uses Lambda functions to orchestrate the creation and deletion of resources for the EKS cluster.

We used m5.xlarge for our cluster worker nodes, as you need at least 16 GB of RAM for the pods to start properly.
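For readers who prefer the command line to the console, the stack creation can also be scripted. The sketch below only prints an AWS CLI command for review; it does not run it. It assumes the template has been saved locally under its original file name. NumberOfNodes is the parameter mentioned in this post; the other parameter keys (VPCID, KeyPairName, NodeInstanceType) and the capabilities are assumptions that should be checked against the Parameters section of the downloaded template, and the subnet parameters are left out for brevity. The stack name, VPC ID, key pair name and node count are made-up values.

```bash
# Prints (does not execute) a create-stack call mirroring the console steps.
# Parameter keys other than NumberOfNodes are assumptions; verify them in the
# template before use. The subnet ID parameters are omitted here.
print_create_stack_command() {
  stack_name=$1
  vpc_id=$2
  key_pair=$3
  cat <<EOF
aws cloudformation create-stack \\
  --stack-name ${stack_name} \\
  --template-body file://amazon-eks-master-existing-vpc.template.yaml \\
  --capabilities CAPABILITY_IAM CAPABILITY_NAMED_IAM \\
  --parameters \\
    ParameterKey=VPCID,ParameterValue=${vpc_id} \\
    ParameterKey=KeyPairName,ParameterValue=${key_pair} \\
    ParameterKey=NodeInstanceType,ParameterValue=m5.xlarge \\
    ParameterKey=NumberOfNodes,ParameterValue=3
EOF
}

# Hypothetical stack name, VPC ID and key pair name.
print_create_stack_command alfresco-eks vpc-0123456789abcdef0 my-key-pair
```

Printing the command first gives a chance to compare every parameter with what the console wizard asks for before anything is created.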

Once the EKS cluster has been created successfully, the following components will have been created on AWS:

- Linux Bastion Server

Based on our stack, AWS creates a t2.micro Amazon Linux EC2 instance in the public subnet and attaches an Elastic IP to it. The bastion server acts as an entry point into the EKS cluster and has the required Kubernetes software, such as kubectl, installed for interacting with and managing the EKS cluster.

- Worker Nodes

Based on the NumberOfNodes selected during the creation of the EKS cluster stack, AWS uses the appropriate AWS EKS AMI (in our case amazon-eks-node-1.12-v20190329 (ami-0f0121e9e64ebd3dc)) to create EC2 instances as our worker nodes in the private subnets. The worker nodes are Kubernetes ready and cluster aware.

- Security groups

Four security groups are created as follows:

- BastionSecurityGroup: Enables SSH access to bastion server

- ControlPlaneSecurityGroup: Enables EKS Cluster communication

- LambdaSecurityGroup: Security group for Lambda to communicate with the cluster API server

- NodeSecurityGroup: Security group for all worker nodes; allows pods to communicate with the cluster API server

- Roles

The stack creates a total of 11 roles, most of which allow Lambda to manage the cluster resources, such as deleting the load balancers, security groups, etc.

The role which we need in our case is the NodeInstanceRole. This is the role that is attached to the worker nodes and dictates which AWS resources the worker nodes have access to. Take note of the exact name of the role, as we will need it in later steps.

- S3 Buckets

The EKS cluster stack creates two S3 buckets, which are as follows:

- KubeConfig Bucket: stores the Kube config file

- Lambda Zip Bucket: stores the Lambda function package zip files such as Helm, KubeGet, etc.

Once we have all our EKS cluster components in place, we can move on to the next step, which is setting up the EFS volume.
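Before moving on, it can be worth confirming two things from the components listed above: the exact name of the NodeInstanceRole, and that the worker nodes have joined the cluster. The helpers below are a hypothetical sketch (the function names and sample role and node names are made up); they only filter text on stdin, so the AWS CLI and kubectl calls that would feed them are shown as comments.

```bash
# Hypothetical helpers for checking the stack output; sample names are made up.

# Keeps only the worker-node role from a list of IAM role names on stdin.
find_node_instance_role() {
  grep 'NodeInstanceRole'
}

# Counts nodes whose STATUS column is exactly "Ready" in the output of
# `kubectl get nodes --no-headers` read from stdin.
count_ready_nodes() {
  awk '$2 == "Ready" { n++ } END { print n + 0 }'
}

# Against a live account (needs AWS CLI credentials):
#   aws iam list-roles --query 'Roles[].RoleName' --output text \
#     | tr '\t' '\n' | find_node_instance_role
#
# From the bastion server:
#   kubectl get nodes --no-headers | count_ready_nodes

# Offline example with made-up role names:
printf '%s\n' 'eks-stack-BastionRole-AAA' 'eks-stack-NodeInstanceRole-BBB' \
  | find_node_instance_role
```

If the ready count matches the NumberOfNodes chosen for the stack, the cluster is in a good state for the EFS and ingress steps.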

Setting up an EFS Volume

Amazon EFS provides shared file storage that supports concurrent read and write access from multiple Amazon EC2 instances and is accessible from all of the Availability Zones in the AWS Region where it is created. Alfresco uses the EFS volume for the contentstore, the Tomcat temp directory and, if S3 is used, the contentstore cache.

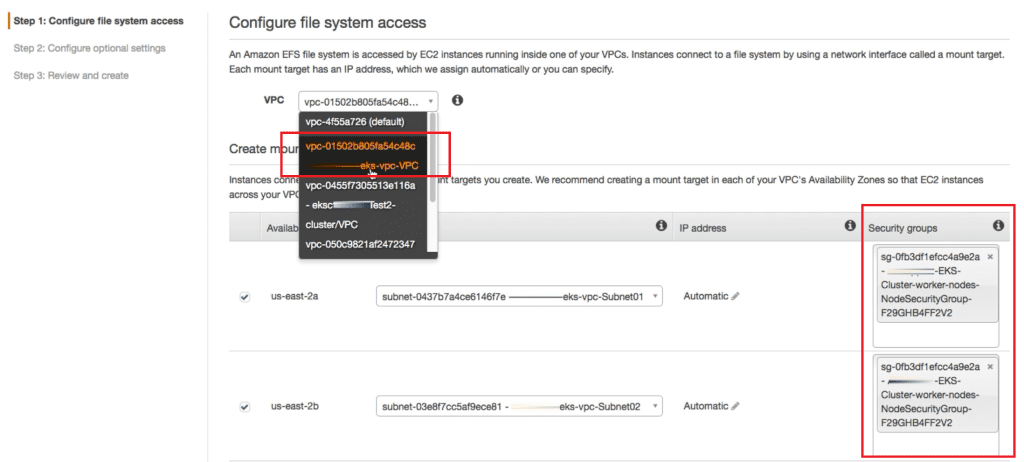

In order to set up the EFS volume, we need to go to https://console.aws.amazon.com/efs/ and click on Create File System.

Then we select the VPC ID we used for the EKS cluster. By default, only the default security group is added in the EFS setup, so it is very important to also add the NodeSecurityGroup that was created and attached to the worker nodes during the cluster creation. See the screenshot for an example.

We can use the default values for the other fields and choose Create File System. Once the EFS volume is created it is important to note the DNS name. We will need it for the next section.
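The DNS name noted above follows a fixed pattern built from the file system ID and the region, so it can be reconstructed if it was not written down. The sketch below assumes a hypothetical file system ID; the function name is ours, and the commented AWS CLI calls are a rough command-line equivalent of the console steps rather than what we ran.

```bash
# Builds the DNS name of an EFS file system from its ID and region.
efs_dns_name() {
  printf '%s.efs.%s.amazonaws.com\n' "$1" "$2"
}

# Hypothetical file system ID; the region is the one used in this post.
efs_dns_name fs-0123abcd ap-southeast-2
# prints: fs-0123abcd.efs.ap-southeast-2.amazonaws.com

# Rough CLI equivalent of the console steps (placeholders in angle brackets):
#   aws efs create-file-system --creation-token alfresco-efs
#   aws efs create-mount-target --file-system-id fs-0123abcd \
#     --subnet-id <private-subnet-id> --security-groups <NodeSecurityGroup-id>
```

One mount target is needed per subnet the worker nodes run in, each with the NodeSecurityGroup attached, which is the CLI counterpart of adding the security group in the console.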

Setting up the Nginx-Ingress Service/Controller

For Alfresco Content Services to be accessible, we first need to install an nginx-ingress service/controller. It creates a web service, virtual LBs and an AWS ELB inside our desired namespace to serve Alfresco Content Services. To set it up we need our AWS cert ARN, AWS cert policy and desired namespace (kubectl create namespace $DESIREDNAMESPACE). We used the following script to deploy the nginx-ingress service/controller in our cluster:

#!/bin/bash

echo "Setting environment variables..."
export AWS_CERT_ARN="arn:aws:acm:ap-southeast-2:xxxxxx:certificate/xxxxxxxx"
export AWS_CERT_POLICY="ELBSecurityPolicy-TLS-1-2-2017-01"
export DESIREDNAMESPACE="alfresco-dev"

echo "Deploying ingress charts..."
helm install stable/nginx-ingress \
  --version 0.14.0 \
  --set controller.scope.enabled=true \
  --set controller.scope.namespace=$DESIREDNAMESPACE \
  --set rbac.create=true \
  --set controller.config."force-ssl-redirect"=\"true\" \
  --set controller.config."server-tokens"=\"false\" \
  --set controller.service.targetPorts.https=80 \
  --set controller.service.annotations."service\.beta\.kubernetes\.io/aws-load-balancer-backend-protocol"="http" \
  --set controller.service.annotations."service\.beta\.kubernetes\.io/aws-load-balancer-ssl-ports"="https" \
  --set controller.service.annotations."service\.beta\.kubernetes\.io/aws-load-balancer-ssl-cert"=$AWS_CERT_ARN \
  --set controller.service.annotations."external-dns\.alpha\.kubernetes\.io/hostname"="$DESIREDNAMESPACE.seedim.com.au" \
  --set controller.service.annotations."service\.beta\.kubernetes\.io/aws-load-balancer-ssl-negotiation-policy"=$AWS_CERT_POLICY \
  --set controller.publishService.enabled=true \
  --namespace $DESIREDNAMESPACE

echo "Deploying ingress charts... Done"
exit 0
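The backslashes in the annotation keys of the script matter: Helm's --set syntax treats an unescaped dot as a separator between nested keys, so a key such as service.beta.kubernetes.io/... has to have its dots escaped to stay a single annotation name. The helper below is a hypothetical sketch (the function name is ours) that prints the --set argument as it would be typed in the script, which can help avoid escaping mistakes when adding further annotations.

```bash
# Escapes the dots in an annotation key and prints the matching --set argument,
# so that Helm reads the key as one name instead of a nested path.
annotation_flag() {
  key=$(printf '%s' "$1" | sed 's/\./\\./g')
  printf '%s controller.service.annotations."%s"=%s\n' '--set' "$key" "$2"
}

annotation_flag "service.beta.kubernetes.io/aws-load-balancer-backend-protocol" "http"
# prints: --set controller.service.annotations."service\.beta\.kubernetes\.io/aws-load-balancer-backend-protocol"=http
```

Each --set argument must also stay on a single line in the script; splitting an annotation key across lines changes the key that Helm receives.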

Checking the nginx-ingress controller details

Check the nginx-ingress controller details using the following command and note the EXTERNAL-IP value (the ELB DNS name), as we’ll need it in the next section.

kubectl --namespace $namespace get services -o wide -w $releasename-nginx-ingress-controller

An example output is as follows:

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

lame-crocodile-nginx-ingress-controller LoadBalancer 10.100.235.176 xxxxxxxxxxxxxap-southeast-2.elb.amazonaws.com 80:31592/TCP,443:32124/TCP 7m app=nginx-ingress,component=controller,release=lame-crocodile
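If the external address is needed in a script rather than read off the screen, it can be pulled out of the same output. The sketch below is a hypothetical helper (the function name, release name and hostname are made up) that prints the fourth column, EXTERNAL-IP, for every service row. The commented jsonpath query is an alternative that asks kubectl for the load balancer hostname directly.

```bash
# Prints the EXTERNAL-IP column for each service row in the output of
# `kubectl get services -o wide` read from stdin (the header row is skipped).
external_address() {
  awk 'NR > 1 { print $4 }'
}

# Offline example with a made-up release name and ELB hostname:
printf '%s\n' \
  'NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR' \
  'demo-nginx-ingress-controller LoadBalancer 10.100.235.176 example.ap-southeast-2.elb.amazonaws.com 80:31592/TCP,443:32124/TCP 7m app=nginx-ingress' \
  | external_address

# Alternative against a live cluster:
#   kubectl --namespace "$namespace" get service "$releasename-nginx-ingress-controller" \
#     -o jsonpath='{.status.loadBalancer.ingress[0].hostname}'
```

The column-based version depends on the table layout, so the jsonpath form is the more robust choice when kubectl access is available.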

Note

Deploying the above nginx-ingress Helm chart results in the creation of a classic AWS ELB with a security group assigned to it. Deleting this release results in the AWS ELB being deleted.

Conclusion

This blog discussed using Kubernetes to deploy Alfresco ACS into AWS using EKS. We have covered the AWS prerequisites required to support the Kubernetes cluster, the creation of the EKS cluster and the setup of the ingress used to access the ACS services. In the next blog we will discuss deploying Alfresco Content Services into the AWS EKS cluster.